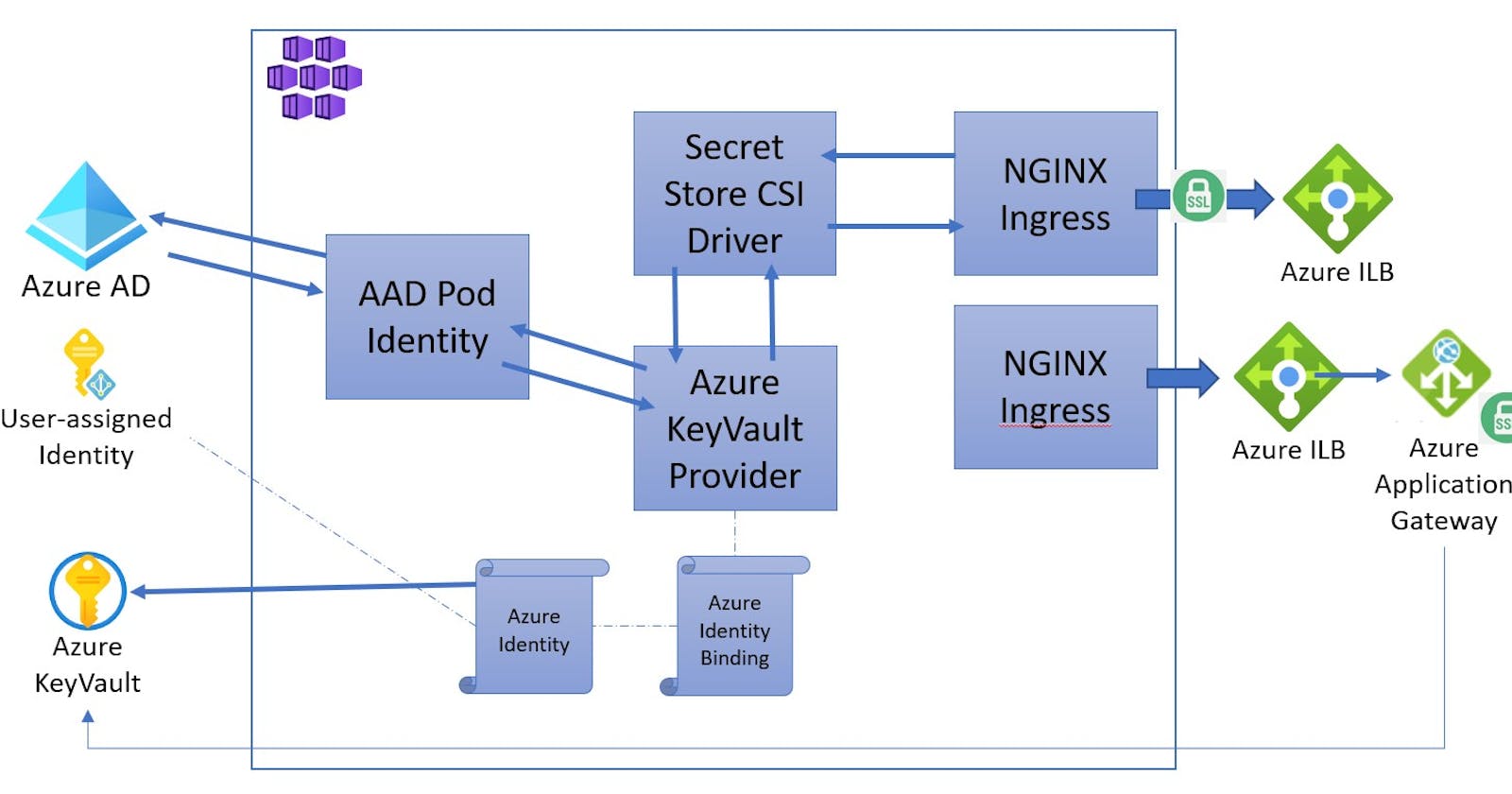

AKS with multiple nginx ingress controllers, Application Gateway and Key Vault certificates

Intro

In the previous blog post we created an AKS cluster with an nginx ingress controller and certificates retrieved from Azure Key Vault.

In this post we will extend that setup to:

- Deploy Azure Private DNS

- Deploy two nginx ingress controllers in the cluster, one for internal traffic and one for public traffic, both with internal IP addresses

- Deploy one Application Gateway as the entry point for public traffic, integrated with Key Vault and performing SSL termination

To follow along you need an AKS cluster with the components from the previous post installed.

1. Azure Private DNS

Create Azure Private DNS resource

# Set up some variables that we are going to use throughout the scripts

export SUBSCRIPTION_ID="<SubscriptionID>"

export TENANT_ID="<YOUR TENANT ID>"

export RESOURCE_GROUP="<AKSResourceGroup>"

export CLUSTER_NAME="<AKSClusterName>"

export REGION="westeurope"

export NODE_RESOURCE_GROUP="MC_${RESOURCE_GROUP}_${CLUSTER_NAME}_${REGION}"

export IDENTITY_NAME="identity-aad" #must be lower case

export KEYVAULT_NAME="<KeyVault Name>"

export DNS_ZONE="private.contoso.com"

export PUBLIC_DNS_ZONE="public.contoso.com"

az network private-dns zone create -g ${RESOURCE_GROUP} -n ${DNS_ZONE}

az network private-dns zone create -g ${RESOURCE_GROUP} -n ${PUBLIC_DNS_ZONE}

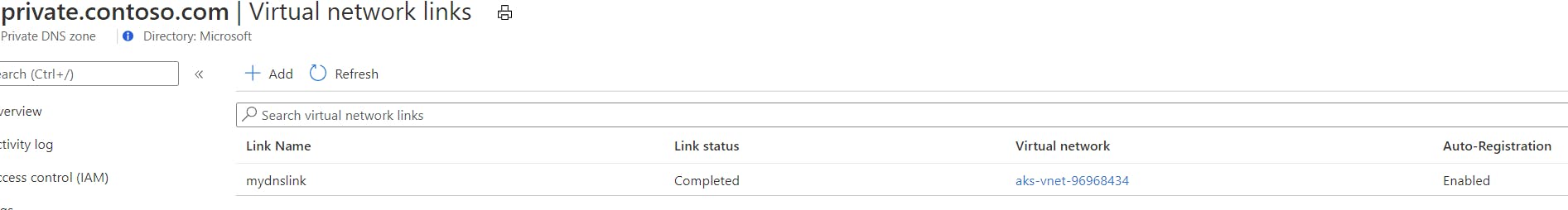

Link the private DNS zone with the AKS Virtual Network (Vnet that was created by AKS). This will allow for resources in that virtual network to resolve the DNS name.

You can get the AKS Vnet Resource Id by going into the Node Resource Group that was created by AKS, the naming convention is MC_<resource group name>_<aks name>_<region>. Click the Virtual Network resource and copy the Virtual Network Resource Id from the properties.
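If you prefer the command line over the portal, the same lookup can be sketched as below. This is a minimal sketch rather than the exact steps used in this post: the function names are hypothetical, and `get_aks_vnet_id` assumes the node resource group contains a single virtual network (the one AKS created).

```shell
# Build the default node resource group name: MC_<resource group>_<cluster>_<region>
default_node_rg() {
  printf 'MC_%s_%s_%s\n' "$1" "$2" "$3"
}

# Ask Azure for the actual node resource group of a cluster.
# Arguments: <resource group> <cluster name>
get_node_rg() {
  az aks show --resource-group "$1" --name "$2" --query nodeResourceGroup --output tsv
}

# Print the resource ID of the first virtual network in a resource group.
# Assumption: the group holds only the VNet that AKS created.
get_aks_vnet_id() {
  az network vnet list --resource-group "$1" --query "[0].id" --output tsv
}

# Pure string example, no Azure access needed
default_node_rg "my-rg" "my-aks" "westeurope"   # prints MC_my-rg_my-aks_westeurope
```

With a logged-in Azure CLI, `get_aks_vnet_id "$(get_node_rg "${RESOURCE_GROUP}" "${CLUSTER_NAME}")"` would give a value for the `-v` argument. Asking Azure for the node resource group avoids relying on the naming convention.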

az network private-dns link vnet create -g ${RESOURCE_GROUP} -n MyDNSLink -z ${DNS_ZONE} -v <AKS Vnet Resource ID> -e true

# link the public DNS zone - auto-registration is false this time because a virtual network can have auto-registration enabled for only one private DNS zone

az network private-dns link vnet create -g ${RESOURCE_GROUP} -n MyDNSLink -z ${PUBLIC_DNS_ZONE} -v <AKS Vnet Resource ID> -e false

When finished, you can check that the link was created in each Private DNS Zone.

2. nginx ingress controller

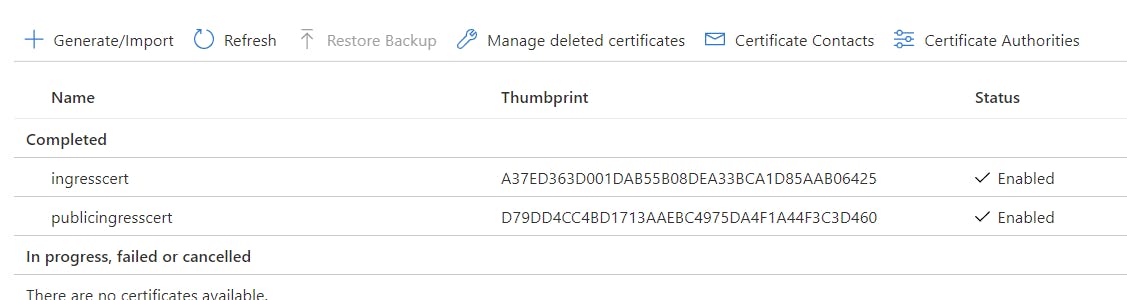

First let's create a new certificate and import it into Key Vault. The certificate for the DNS_ZONE was created in the previous post.

export CERT_NAME=publicingresscert

openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
    -out ingress-tls.crt \
    -keyout ingress-tls.key \
    -subj "/CN=${PUBLIC_DNS_ZONE}"

# press Enter at the export password prompt to skip setting a password

openssl pkcs12 -export -in ingress-tls.crt -inkey ingress-tls.key -out $CERT_NAME.pfx

az keyvault certificate import --vault-name ${KEYVAULT_NAME} -n $CERT_NAME -f $CERT_NAME.pfx
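Before importing, it can be worth checking that the certificate carries the subject you expect. A small sketch, assuming `openssl` is on the PATH; it generates a throwaway certificate in a temporary directory (the file names here are illustrative, not the ones used in this post) and prints its subject and expiry date.

```shell
# Work in a throwaway directory so the real ingress-tls.* files are untouched
workdir=$(mktemp -d)
cn="public.contoso.com"
cert_info=""

if command -v openssl >/dev/null 2>&1; then
  # Same kind of self-signed certificate as in the post, written to the temp directory
  openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
    -out "${workdir}/check.crt" \
    -keyout "${workdir}/check.key" \
    -subj "/CN=${cn}" 2>/dev/null

  # Print only the subject and expiry date instead of the whole certificate
  if [ -s "${workdir}/check.crt" ]; then
    cert_info=$(openssl x509 -in "${workdir}/check.crt" -noout -subject -enddate)
    echo "${cert_info}"
  fi
fi
```

Running the same `openssl x509` command against `ingress-tls.crt` shows the values for the certificate that is actually being imported.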

If you are continuing from the last post you should now have two certificates in Key Vault, including the newly created publicingresscert.

In this article I won't be creating a new identity; I will reuse the identity created in the last post.

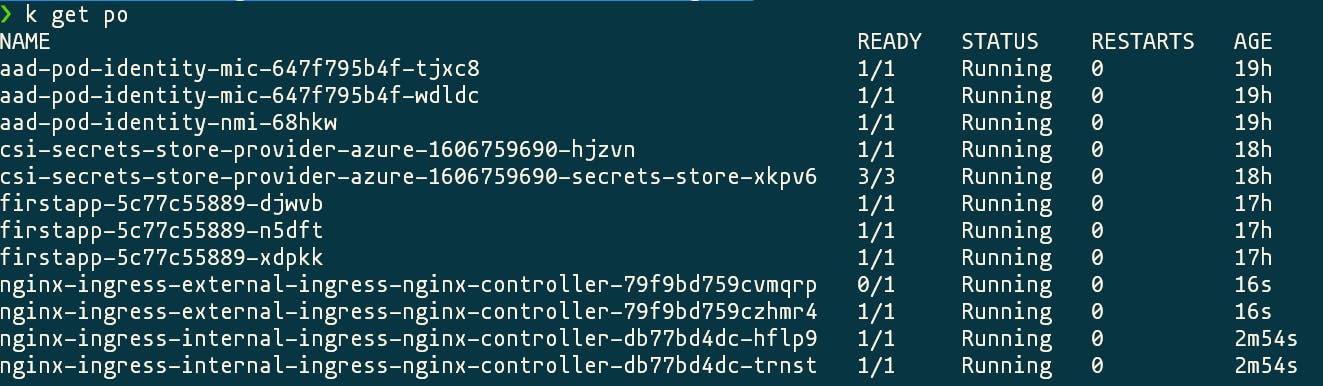

Now let's configure the ingress controllers. To run multiple ingress controllers you need to give each one its own ingress class and add the matching annotation to each ingress so that it maps to the correct controller.

Install or upgrade the helm release (if continuing from the previous post)

# internal ingress controller - set ingress class = nginx-internal

helm install nginx-ingress-internal ingress-nginx/ingress-nginx \
    --set controller.replicaCount=2 \
    --set controller.ingressClass=nginx-internal \
    --set controller.podLabels.aadpodidbinding=${IDENTITY_NAME} \
    -f - <<EOF
controller:
  service:
    annotations:
      service.beta.kubernetes.io/azure-load-balancer-internal: "true"
  extraVolumes:
    - name: secrets-store-inline
      csi:
        driver: secrets-store.csi.k8s.io
        readOnly: true
        volumeAttributes:
          secretProviderClass: "ingress-tls" # name of the SecretProviderClass created in the previous post
  extraVolumeMounts:
    - name: secrets-store-inline
      mountPath: "/mnt/secrets-store"
      readOnly: true
EOF

#external ingress controller - set ingress class = nginx-external

helm install nginx-ingress-external ingress-nginx/ingress-nginx \
    --set controller.replicaCount=2 \
    --set controller.ingressClass=nginx-external \
    --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-internal"=true

As you can see in the scripts each ingress controller has an ingressClass and the annotation for internal load balancer defined.

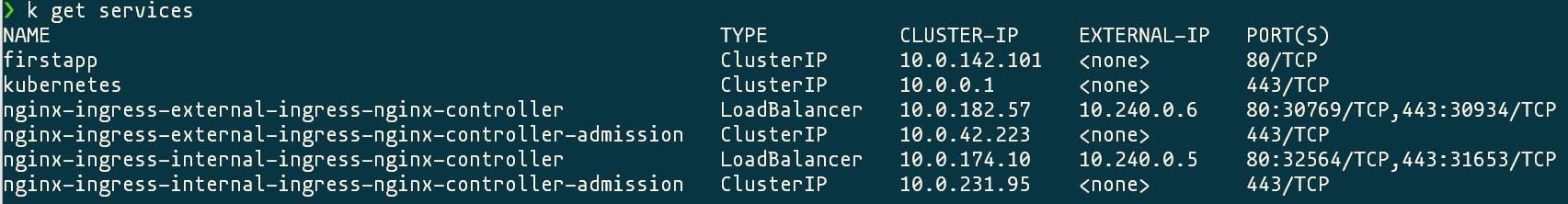

After the install succeeds you will have four new pods, two for the internal ingress controller and two for the external one, because we defined 2 replicas for each.

There are also two new services with internal IPs from the range of your virtual network.

Now let's create both ingresses. Remember the ingress class we defined for each ingress controller? Now is the time to use that property.

#deploy the second demo app

kubectl apply -f https://raw.githubusercontent.com/hjgraca/playground/master/k8s/nginx/secondapp.yaml

#internal ingress

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx-internal
  name: internal-ingress
  namespace: default
spec:
  tls:
  - hosts:
    - $DNS_ZONE
    secretName: ingress-tls-csi # the secret that got created by the secret store provider and assigned to the ingress controller
  rules:
  - host: $DNS_ZONE
    http:
      paths:
      - backend:
          serviceName: firstapp
          servicePort: 80
        path: /
EOF

# external ingress without TLS; TLS is handled by Application Gateway

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx-external
  name: external-ingress
  namespace: default
spec:
  rules:
  - host: $PUBLIC_DNS_ZONE
    http:
      paths:
      - backend:
          serviceName: secondapp
          servicePort: 80
        path: /
EOF
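The `networking.k8s.io/v1beta1` Ingress API used in these manifests was removed in Kubernetes 1.22, so on newer clusters they need the `networking.k8s.io/v1` shape. The helper below is a sketch of how the external ingress could be rendered in that form; `render_ingress_v1` is a hypothetical name, and I am assuming the controller still selects ingresses through the `kubernetes.io/ingress.class` annotation as configured earlier.

```shell
# Render a networking.k8s.io/v1 Ingress for one host and one backend service.
# Arguments: <ingress name> <ingress class> <host> <service name> <service port>
render_ingress_v1() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: $2
  name: $1
  namespace: default
spec:
  rules:
  - host: $3
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: $4
            port:
              number: $5
EOF
}

# Print the manifest; pipe it to "kubectl apply -f -" to create the ingress
render_ingress_v1 external-ingress nginx-external public.contoso.com secondapp 80
```

The main differences from v1beta1 are the required `pathType` and the nested `service.name` and `service.port.number` fields, which replace `serviceName` and `servicePort`.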

Once installed we can list the ingresses; the list shows the hosts and the bound IP address. If you describe one of the ingresses you can see which service and pods it is attached to.

I found that it's better to wait for one ingress controller to be fully installed and only then move on to the next one.

Let's test the endpoints

First, let's add those IP addresses to the Private DNS Zones we created earlier

az network private-dns record-set a add-record -g ${RESOURCE_GROUP} -z ${DNS_ZONE} -n @ -a <ip address of internal ingress>

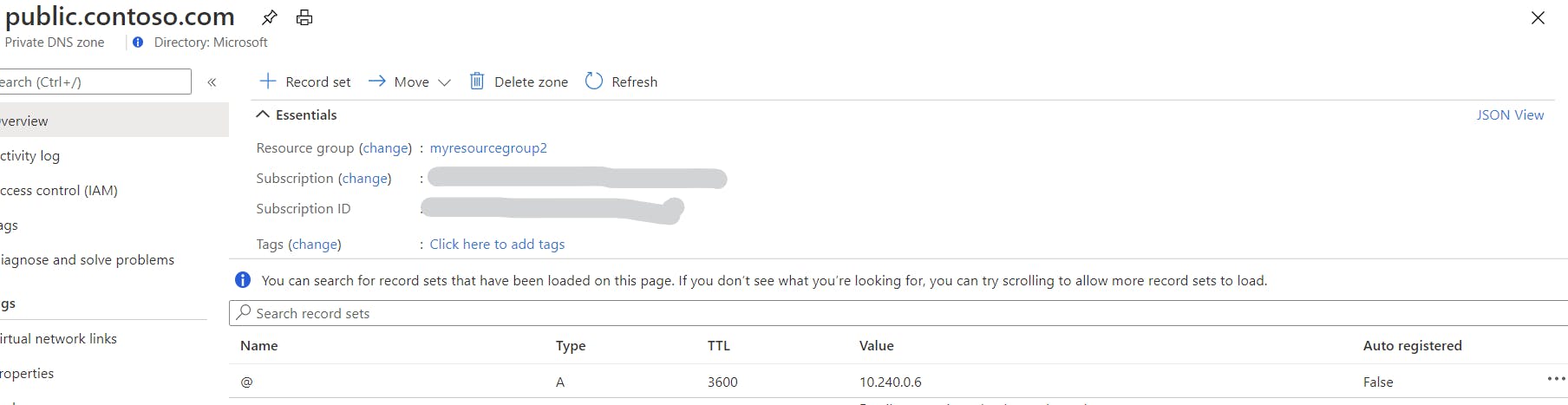

az network private-dns record-set a add-record -g ${RESOURCE_GROUP} -z ${PUBLIC_DNS_ZONE} -n @ -a <ip address of external ingress>

Those scripts will create A records for the domain in the Private DNS Zones
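The IP address placeholders in the two commands can also be filled from the cluster rather than copied by hand. A minimal sketch, assuming `kubectl` is pointed at the cluster and the ingresses are named `internal-ingress` and `external-ingress` as in the manifests; `ingress_ip` is a hypothetical helper.

```shell
# Print the load balancer IP reported in an Ingress resource's status.
# Arguments: <ingress name> [namespace, defaults to "default"]
ingress_ip() {
  kubectl get ingress "$1" --namespace "${2:-default}" \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Example usage (needs cluster and Azure CLI access, so left commented out):
# INTERNAL_IP=$(ingress_ip internal-ingress)
# az network private-dns record-set a add-record -g "${RESOURCE_GROUP}" -z "${DNS_ZONE}" -n @ -a "${INTERNAL_IP}"
```

The status field stays empty until the controller has published an address, so an empty result usually means the ingress is not ready yet.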

Since we are using internal IP addresses, those endpoints can only be reached from within that virtual network or from a network connected to it, for example through a VPN or a bastion host. I am going for the simplest option of running a pod inside the cluster.

#run a pod with a tweaked busybox image with curl

kubectl run -it --rm jumpbox-pod --image=radial/busyboxplus:curl

#when in the prompt we can now curl our private endpoints

#public -> external ingress -> non TLS -> returns secondapp

curl http://public.contoso.com

#private -> internal ingress -> TLS -> returns firstapp

curl -v -k https://private.contoso.com

Now internal consumers can reach our TLS-protected endpoint at https://private.contoso.com as long as they are in the same virtual network. For other ways of resolution, such as resolving from on-premises, you will need a DNS forwarder running in Azure that forwards the DNS queries to Azure DNS.

As for external consumers, we want to protect that endpoint using Application Gateway.

3. Azure Application Gateway to expose service

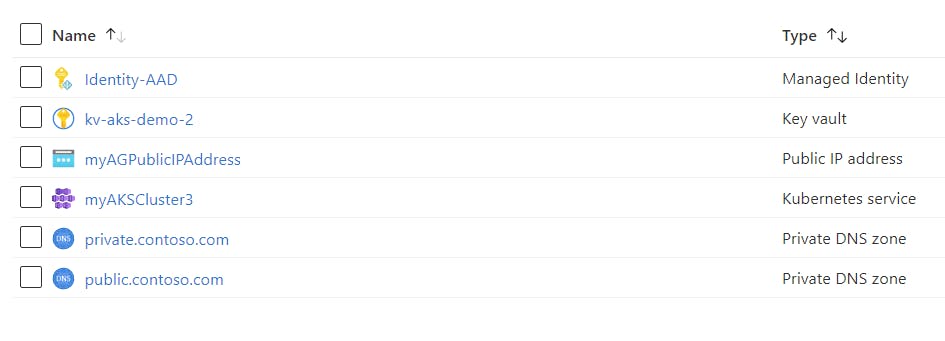

To create an Application Gateway we first need some infrastructure to be present.

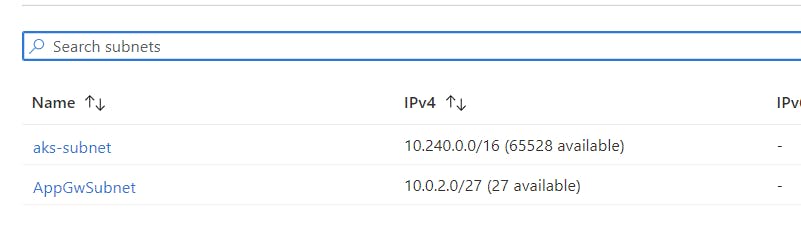

#first create a subnet in the existing AKS Vnet

az network vnet subnet create \
    --name AppGwSubnet \
    --resource-group ${NODE_RESOURCE_GROUP} \
    --vnet-name <name of the vnet> \
    --address-prefix 10.0.2.0/27

# Now let's create the public IP that will serve the public requests

az network public-ip create \
    --resource-group ${RESOURCE_GROUP} \
    --name myAGPublicIPAddress \
    --allocation-method Static \
    --sku Standard

This results in two subnets in the virtual network

And a public IP address in your resource group
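A quick check on the subnet size: a /27 prefix gives 32 addresses, and Azure reserves 5 addresses in every subnet, which leaves 27 for the Application Gateway. The arithmetic, as a small sketch (`usable_ips` is a hypothetical helper name):

```shell
# Print how many addresses remain in an Azure subnet of the given prefix length.
# Azure reserves 5 addresses in each subnet.
usable_ips() {
  total=$(( 1 << (32 - $1) ))   # 2^(32 - prefix) addresses in the block
  echo $(( total - 5 ))
}

usable_ips 27   # prints 27
usable_ips 24   # prints 251
```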

Now let's create the Application Gateway. (Disclaimer: since I did not create a vnet in the resource group where the AKS cluster is, I have to deploy it to the Node Resource Group.)

export APPGW_NAME="myAppGateway"

#create application gateway

az network application-gateway create \
    --name ${APPGW_NAME} \
    --location ${REGION} \
    --resource-group ${NODE_RESOURCE_GROUP} \
    --capacity 1 \
    --sku Standard_v2 \
    --public-ip-address myAGPublicIPAddress \
    --vnet-name <VNET Name> \
    --subnet AppGwSubnet

#add backend pool ip address of our ingress

az network application-gateway address-pool create -g ${NODE_RESOURCE_GROUP} --gateway-name ${APPGW_NAME} -n AKSAddressPool --servers <ip address of your public ingress>

# One-time operation: assign the identity to Application Gateway so it can access Key Vault

az network application-gateway identity assign \
    --gateway-name ${APPGW_NAME} \
    --resource-group ${NODE_RESOURCE_GROUP} \
    --identity ${IDENTITY_RESOURCE_ID}
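`IDENTITY_RESOURCE_ID` is not in this post's variable list; presumably it carries over from the previous post. If it is missing from your shell, a sketch like the following could recover it. I am assuming here that the identity lives in `${RESOURCE_GROUP}`, so adjust the group if you created it elsewhere; `get_identity_id` is a hypothetical helper.

```shell
# Print the full resource ID of a user-assigned managed identity.
# Arguments: <resource group> <identity name>
get_identity_id() {
  az identity show --resource-group "$1" --name "$2" --query id --output tsv
}

# Example usage (needs a logged-in Azure CLI, so left commented out):
# export IDENTITY_RESOURCE_ID=$(get_identity_id "${RESOURCE_GROUP}" "${IDENTITY_NAME}")
```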

#get secret-id of your certificate from keyvault

versionedSecretId=$(az keyvault certificate show -n publicingresscert --vault-name $KEYVAULT_NAME --query "sid" -o tsv)

unversionedSecretId=$(echo $versionedSecretId | cut -d'/' -f-5) # remove the version from the url

#add the ssl certificate to app gateway

az network application-gateway ssl-cert create \
    --resource-group ${NODE_RESOURCE_GROUP} \
    --gateway-name ${APPGW_NAME} \
    -n MySSLCert \
    --key-vault-secret-id $unversionedSecretId
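To see what the `cut` step does, here it is applied to a made-up secret ID (the vault name and version string are illustrative). Splitting on `/` and keeping the first five fields drops the trailing version segment. As far as I know, pointing Application Gateway at the unversioned ID lets it pick up renewed certificate versions from Key Vault.

```shell
# Remove the version segment from a Key Vault secret ID.
# Fields when split on "/": "https:", "", "<vault host>", "secrets", "<name>", "<version>"
strip_secret_version() {
  echo "$1" | cut -d'/' -f-5
}

strip_secret_version "https://myvault.vault.azure.net/secrets/publicingresscert/0123456789abcdef"
# prints https://myvault.vault.azure.net/secrets/publicingresscert
```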

#create a new port for HTTPS

az network application-gateway frontend-port create \
    --port 443 \
    --gateway-name ${APPGW_NAME} \
    --resource-group ${NODE_RESOURCE_GROUP} \
    --name port443

#create an HTTP-LISTENER for HTTPS that uses your certificate

#this is your entry point

az network application-gateway http-listener create -g ${NODE_RESOURCE_GROUP} --gateway-name ${APPGW_NAME} \
    --frontend-port port443 -n https --frontend-ip appGatewayFrontendIP --ssl-cert MySSLCert --host-name ${PUBLIC_DNS_ZONE}

#create a rule to map the http listener to the backend pool

az network application-gateway rule create \
    --gateway-name ${APPGW_NAME} \
    --name rule2 \
    --resource-group ${NODE_RESOURCE_GROUP} \
    --http-listener https \
    --address-pool AKSAddressPool

# create a custom probe with the host name specified

az network application-gateway probe create -g ${NODE_RESOURCE_GROUP} --gateway-name ${APPGW_NAME} \
    -n MyProbe --protocol http --host ${PUBLIC_DNS_ZONE} --path /

# update the http-settings to use the new probe

az network application-gateway http-settings update --enable-probe true --gateway-name ${APPGW_NAME} --name appGatewayBackendHttpSettings --probe MyProbe --resource-group ${NODE_RESOURCE_GROUP}
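The custom probe matters because nginx routes on the Host header: a probe that does not send `${PUBLIC_DNS_ZONE}` as the host will not match the ingress rule and is likely to get a 404, which marks the backend unhealthy. One way to confirm the probe is working is to ask the gateway for its backend health. A sketch, with `backend_health` as a hypothetical helper and the JMESPath query being my assumption about the shape of the response:

```shell
# Print the health state reported for each backend server of an Application Gateway.
# Arguments: <resource group> <gateway name>
backend_health() {
  az network application-gateway show-backend-health \
    --resource-group "$1" --name "$2" \
    --query "backendAddressPools[].backendHttpSettingsCollection[].servers[].health" \
    --output tsv
}

# Example usage (needs a logged-in Azure CLI, so left commented out):
# backend_health "${NODE_RESOURCE_GROUP}" "${APPGW_NAME}"
```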

After deploying and configuring the Application Gateway we can test our public endpoint. Since this is not a domain I own, we need to use curl's --resolve option or change the local hosts file.

# curl the public endpoint, resolving the host name to the gateway's public IP, and inspect the certificate

curl -v -k --resolve $PUBLIC_DNS_ZONE:443:20..***** https://$PUBLIC_DNS_ZONE

#the response

* Server certificate:

* subject: CN=public.contoso.com

* start date: Nov 30 21:05:33 2020 GMT

* expire date: Nov 30 21:05:33 2021 GMT

* issuer: CN=public.contoso.com

* SSL certificate verify result: self signed certificate (18), continuing anyway.

#and the secondapp html is returned

We did it!

What did we do?

- Deployed two ingress controllers in our cluster, one for internal traffic and the other for public traffic; this is useful when you want to separate your workloads for different audiences

- Deployed one ingress with TLS; the certificate is stored in Key Vault and we assigned that secret to our ingress using AAD pod identity and the CSI Secret Store Provider

- Deployed another ingress without TLS, but still behind an internal load balancer

- Deployed an Azure Application Gateway that does the TLS offloading and gets its certificate from Key Vault; it uses the same User Assigned Managed Identity that we used for AAD pod identity to access Key Vault

- Created a private DNS zone so we can resolve DNS names from our virtual network

Final Notes

- It's easier/recommended to deploy Application Gateway using ARM or Terraform

- If you want to automatically add your private DNS Zones based on the Kubernetes ingresses you can use a tool like External-DNS